In Part 1 of this post I went through some of the basics of Windows AppFabric Cache and its usage. In this part I want to cover the management and monitoring of your AppFabric Cache cluster, as well as some best practices and gotchas that I have learned over the last few years of working with this product.

You can monitor the health of your AppFabric cluster in several ways:

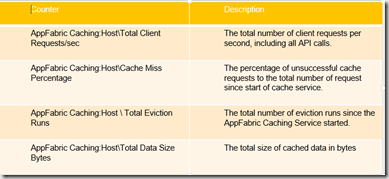

- Windows Performance Monitor (Perfmon) counters. There are three counter categories related to the caching features of AppFabric: AppFabric Caching:Cache, AppFabric Caching:Host and AppFabric Caching:SecondaryHost. These let you monitor the performance and health of your installation at several levels: the logical cache running across multiple cluster hosts, each individual host, and, if High Availability (HA) is turned on, the secondaries. The full counter list is at http://msdn.microsoft.com/en-us/library/ff637725.aspx. Some of the most useful counters are below:

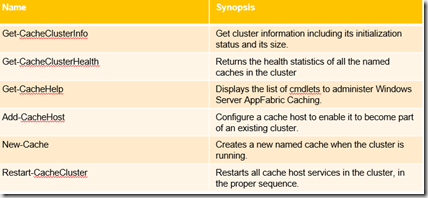

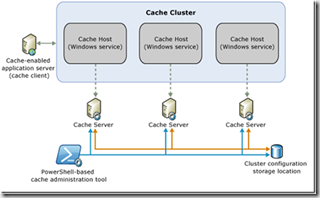

- Monitoring and management with PowerShell. PowerShell is the main vehicle for managing Windows AppFabric. Two PowerShell modules are installed with AppFabric Cache:

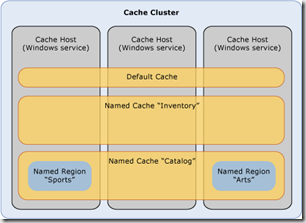

DistributedCacheAdministration and DistributedCacheConfiguration. You can load them into a PowerShell session with the Import-Module cmdlet. Together these modules provide 41 cmdlets for AppFabric; very detailed documentation is on MSDN at http://msdn.microsoft.com/en-us/library/ff718177(v=azure.10).aspx. The most commonly used ones are below:

- Logging. Windows AppFabric Cache can trace events on both the cache client and the cache host. These events are captured by enabling log sinks in the configuration settings of the client application's configuration file or the cache host's DistributedCache.exe.config file. By default, log sinks are enabled on both the cache client and the cache host: without any explicit log sink settings, each cache client automatically creates a console-based log sink with the event trace level set to Error. To override this default, you can explicitly configure a log sink. There are three types of log sink: console, file and ETW (Event Tracing for Windows). To change the cache host's log sink settings from their defaults, use the log element in the dataCacheConfig element, which lets you change the default file-based log sink's event trace level and the location where it writes the log file. When writing to a folder outside the default location, make sure the folder exists and that the application has been granted write permissions on it. Otherwise, your application will throw exceptions when you enable the file-based log sink.

Eviction and Throttling. A Windows Server AppFabric cache cluster uses expiration and eviction to control the amount of memory that the Caching Service uses on a cache host. Expiration based on an item's TTL (Time To Live) is normal, but you may also notice eviction, where objects are removed before their TTL expires. From the application's perspective, eviction means that items that would otherwise still be in the cache are missing. The application must then repopulate those items, which can hurt its performance. There are two reasons for eviction:

- The available physical memory on the server is critically low.

- The Caching Service’s memory usage exceeds the high watermark for the cache host.

Symptoms of eviction include events 118 and 115 in the AppFabric Operational log.

When the memory consumption of the Caching Service on a cache server exceeds the low watermark, AppFabric starts evicting objects that have already expired. When memory consumption exceeds the high watermark, objects are evicted whether or not they have expired, until memory consumption falls back to the low watermark. Subsequently cached objects may be rerouted to other hosts to maintain an optimal distribution of memory. The default low and high watermarks are 80% and 90% of the reserved cache size, respectively. You can use the Get-CacheHostConfig cmdlet to see the reserved memory size (the Size parameter) and the watermarks. The eviction performance counters in the AppFabric Caching:Host category are useful for spotting incidents of eviction and throttling.
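The watermark rules above can be illustrated with a small simulation. This is a minimal Python sketch, not AppFabric's implementation. `CachedItem`, `watermarks` and `evict` are hypothetical names. The 80/90 defaults come from the text, and sizes are in MB. Evicting live objects in least-recently-used order is an assumption of the sketch.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CachedItem:
    key: str
    size_mb: int
    expired: bool      # True if the item's TTL has already elapsed
    last_access: int   # logical clock; lower value = less recently used


def watermarks(size_mb: int, low_pct: int = 80, high_pct: int = 90) -> Tuple[int, int]:
    """Low/high watermark thresholds in MB for a host with the given cache Size."""
    return size_mb * low_pct // 100, size_mb * high_pct // 100


def evict(items: List[CachedItem], size_mb: int,
          low_pct: int = 80, high_pct: int = 90) -> List[str]:
    """Remove items from the list following the watermark rules; return evicted keys."""
    low, high = watermarks(size_mb, low_pct, high_pct)
    used = sum(i.size_mb for i in items)
    over_high = used > high          # remember whether the high watermark was crossed
    evicted: List[str] = []

    if used <= low:
        return evicted               # at or below the low watermark: nothing to do

    # Above the low watermark: drop objects whose TTL has already expired.
    for item in [i for i in items if i.expired]:
        items.remove(item)
        used -= item.size_mb
        evicted.append(item.key)

    # Above the high watermark: evict live objects too (LRU order is an
    # assumption of this sketch) until usage is back at the low watermark.
    if over_high:
        for item in sorted(items, key=lambda i: i.last_access):
            if used <= low:
                break
            items.remove(item)
            used -= item.size_mb
            evicted.append(item.key)

    return evicted


print(watermarks(7168))  # (5734, 6451)
```

For a cache host Size of 7168 MB (7GB), the sketch puts the low and high watermarks at about 5.6GB and 6.3GB.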

Throttling is more severe than eviction. When physical memory runs critically low and eviction cannot free it quickly enough, throttling kicks in. The cache cluster will not write data to any cache that resides on a throttled cache host until available physical memory increases enough to resolve the throttled state. The most obvious symptom of throttling comes from applications: attempts to write to the cache generate DataCacheException errors (http://msdn.microsoft.com/en-us/library/ff921032.aspx). More on eviction and throttling: http://msdn.microsoft.com/en-us/library/ff921021.aspx, http://msdn.microsoft.com/en-us/library/ff921030.aspx.
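On the application side, one way to cope with throttling is to treat a failed cache write as non-fatal and back off before retrying. This makes sense because the host will not accept writes until memory recovers, and the application still has the data from its backing store. The Python sketch below is hypothetical: `CacheWriteError` stands in for DataCacheException, and `put` stands in for whatever client call writes to the cache. The exponential backoff policy is an assumption, not AppFabric guidance.

```python
import time
from typing import Callable


class CacheWriteError(Exception):
    """Hypothetical stand-in for the DataCacheException a throttled host causes."""


def put_with_backoff(put: Callable[[str, object], None], key: str, value: object,
                     attempts: int = 3, base_delay: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Try to cache value, backing off exponentially on write errors.

    Returns True if a write succeeded, False if every attempt failed; in the
    latter case the caller simply keeps serving data from the backing store.
    """
    for attempt in range(attempts):
        try:
            put(key, value)
            return True
        except CacheWriteError:
            if attempt < attempts - 1:
                # Delay doubles after each failure: 0.5s, then 1s, and so on.
                sleep(base_delay * (2 ** attempt))
    return False
```

The `sleep` parameter is injectable so that the delay schedule can be tested without actually waiting.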

Finally, some thoughts from experience:

-

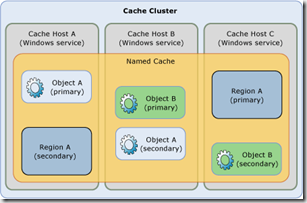

Lead Hosts. When the leadHostManagement and leadHost settings are true, the cache host is elevated to a level of increased responsibility in the cluster and designated as a lead host. In addition to the normal cache host’s operations related to caching data, the lead host also works with other lead hosts to manage the cluster operations.When lead hosts perform the cluster management role, if a majority of lead hosts fail, the entire cache cluster shuts down. Alternatively, if using SQL Server and no lead hosts if SQL Server fails the cluster will shut down. There is some additional overhead involved in lead host communication. It is a good practice to run your cluster with as few lead hosts as necessary to maintain a quorum of leads. For small clusters, ranging from one to three nodes in size, it is acceptable to run all nodes as lead nodes as the amount of additional overhead generated by a small grouping of lead hosts will be relatively low. For larger clusters, however, to minimize overhead involved in ensuring cluster stability, it is recommended to use a smaller percentage of lead hosts—for example, 5 lead hosts for a 10-node cluster. You have to balance here is added security\stability of more lead hosts vs. overhead that lead hosts suffers due to cluster management role.

- It is also recommended not to allocate more than 16GB of RAM to the AppFabric server (and, correspondingly, no more than 8GB for the cache host size in its configuration). Beyond these sizes, garbage collection could take long enough to cause a noticeable interruption for clients. A common recommendation is to spec AppFabric servers with 16GB of physical RAM and set the cache host size to 7GB. With this arrangement, you can expect the AppFabric process to use about 14GB (roughly twice the configured cache size), leaving 2GB for other processes on the server.

- The default client setting of maxConnectionsToServer=1 works in many situations. However, when a single shared DataCacheFactory is used by many threads posting on that connection, you may need to increase it. In high-throughput scenarios, a value above 1 is recommended. In general, expect only a modest increase, based on the number of hosts in your cluster.

- Use an odd number of lead hosts (3, 5, 7 and so on) when using a lead host configuration, since a majority of lead hosts (more than 50%) is required for the cluster to stay alive. An even count adds overhead without tolerating any more failures: 4 lead hosts, like 3, can lose only one. Use at least 4 servers if you plan to use the High Availability feature. Because HA requires 3 servers, having an extra server lets you take a node out for maintenance without disrupting the cluster.
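The lead host majority rule can be checked with a little arithmetic. The Python sketch below uses hypothetical function names for illustration only. It computes how many lead hosts must stay up and how many failures a cluster tolerates, and it assumes nothing beyond the strict-majority (more than 50%) rule stated above.

```python
def lead_hosts_needed(total_leads: int) -> int:
    """Smallest number of lead hosts that forms a strict majority (> 50%)."""
    return total_leads // 2 + 1


def cluster_survives(total_leads: int, failed_leads: int) -> bool:
    """True if the lead hosts still running form a majority."""
    return total_leads - failed_leads >= lead_hosts_needed(total_leads)


def tolerated_failures(total_leads: int) -> int:
    """How many lead hosts can fail before the cluster shuts down."""
    return total_leads - lead_hosts_needed(total_leads)


for n in range(1, 8):
    print(f"{n} lead hosts: need {lead_hosts_needed(n)} up, "
          f"tolerate {tolerated_failures(n)} failure(s)")
```

The loop shows that 4 lead hosts tolerate one failure, the same as 3, and that 6 tolerate two, the same as 5. This is why an odd count is the better choice.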

Well, that pretty much covers the basics. Over the years my colleagues Rick McGuire, Ryan Berry and Xuehong Gan of Microsoft were critical in helping me and my customers with AppFabric support, and it is with their help that I learned this product, so huge thanks to them as well.