Although I am mainly a Microsoft and Azure-centric guy, reading a bit about Google BigQuery got me interested, so I decided to explore it a bit more here.

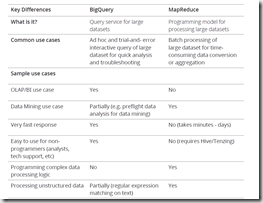

BigQuery is a RESTful web service that enables interactive analysis of massively large datasets, working in conjunction with Google Cloud Storage. It is a Platform as a Service (PaaS) that may be used complementarily with MapReduce. BigQuery (BQ) is reportedly based on Dremel, a scalable, interactive ad hoc query system for analysis of read-only nested data. To use data in BigQuery, it must first be uploaded to Google Cloud Storage and then, in a second step, imported using the BigQuery HTTP API. BigQuery requires all requests to be authenticated, supporting a number of Google-proprietary mechanisms as well as OAuth.

Let’s start with what BigQuery is not: it is neither an RDBMS nor a MapReduce technology. As I stated, BigQuery is based on an internal Google technology called Dremel. Dremel is a query service that allows you to run SQL-like queries against very, very large datasets and get accurate results in mere seconds. You just need a basic knowledge of SQL to query extremely large datasets in an ad hoc manner. At Google, engineers and non-engineers alike, including analysts, tech support staff and technical account managers, use this technology many times a day. BigQuery provides the core set of features available in Dremel to third-party developers. It does so via a REST API, a command line interface, a Web UI, access control and more, while maintaining the unprecedented query performance of Dremel.

Why is BigQuery so fast? The answer can be found in two core technologies that give BQ this unprecedented performance:

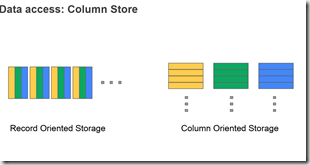

- Columnar Storage. Data is stored in a columnar fashion, which makes it possible to achieve a very high compression ratio and scan throughput.

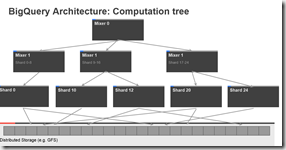

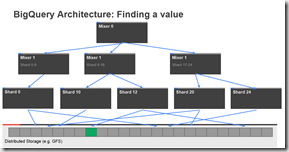

- Tree Architecture. A tree architecture is used for dispatching queries and aggregating results across thousands of machines in a few seconds.

BigQuery stores data in columnar storage, which means it separates a record into column values and stores each value on a different storage volume, whereas a traditional RDBMS normally stores the whole record on one volume.
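To make the row-versus-column distinction concrete, here is a minimal Python sketch. It is only an illustration of the idea, not how BigQuery lays out data internally; the records, field names and the `to_columns` helper are all hypothetical.

```python
# Hypothetical records as a row store would keep them: one whole record per entry.
rows = [
    {"title": "doc-a", "author": "alice", "pages": 12},
    {"title": "doc-b", "author": "bob", "pages": 30},
    {"title": "doc-c", "author": "alice", "pages": 7},
]


def to_columns(records):
    """Split a list of records into one list of values per column."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


# Column layout: each column's values sit together and can be stored
# (and read) independently of the other columns.
columns = to_columns(rows)
print(columns["title"])   # ['doc-a', 'doc-b', 'doc-c']
print(columns["pages"])   # [12, 30, 7]
```

A query that needs only `title` can now read the single `columns["title"]` list instead of walking every full record.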

Actually, this technology isn’t that new for anyone who has dealt with DW technologies for a while; it’s a fairly hot topic today, with SQL Server Columnstore Indexes and the SAP HANA In-Memory Column Store, for example. As you may know, columnar storage has the following advantages:

- Traffic minimization. Only the column values a query requires are scanned and transferred during query execution. For example, a query “SELECT top(title) FROM foo” would access the title column values only.

- Higher compression ratio. One study reports that columnar storage can achieve a compression ratio of 1:10, whereas ordinary row-based storage can compress at roughly 1:3. Because each column would have similar values, especially if the cardinality of the column (variation of possible column values) is low, it’s easier to gain higher compression ratios than row-based storage.
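Both advantages can be sketched in a few lines of Python. Run-length encoding is used here only as one simple example of a scheme that benefits from low cardinality; the source does not say which encodings BigQuery actually uses, and the table and column names are hypothetical.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


# Hypothetical low-cardinality column: seven values, only two distinct ones.
genre_column = ["comedy"] * 4 + ["tragedy"] * 3
encoded = run_length_encode(genre_column)
print(encoded)  # [('comedy', 4), ('tragedy', 3)]

# Hypothetical three-column table stored column by column.
table = {
    "title": ["t1", "t2", "t3", "t4", "t5", "t6", "t7"],
    "genre": genre_column,
    "pages": [10, 20, 30, 40, 50, 60, 70],
}

# A query that touches only `title` scans one column, not the whole table.
total_values = sum(len(column) for column in table.values())
scanned_values = len(table["title"])
print(scanned_values, "of", total_values, "values scanned")  # 7 of 21 values scanned
```

Seven stored values shrink to two pairs because similar values sit next to each other in a column; in a row layout they would be interleaved with titles and page counts.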

Columnar storage has the disadvantage of not working efficiently when updating existing records. In the case of BigQuery, it simply doesn’t support any update operations. Thus the technique has been used mainly for read-only OLAP/BI workloads. Although the technology has been popular as a data warehouse database design, Dremel/BigQuery is one of the first implementations of a columnar storage-based analytics system that harnesses the computing power of many thousands of servers and is delivered as a cloud service.

One of the challenges Google had in designing Dremel/BigQuery was how to dispatch queries and collect results across tens of thousands of machines in a matter of seconds. The challenge was resolved by using a tree architecture. The architecture forms a massively parallel distributed tree for pushing a query down the tree and then aggregating the results from the leaves at a blazingly fast speed.
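The push-down and aggregate-up pattern can be sketched as a small recursive function. This is a toy model under stated assumptions: the dictionary-based tree, the `run_query` name and the shard contents are hypothetical, the only "query" is a sum, and the children are visited sequentially here, whereas a real serving tree would fan out to them in parallel.

```python
def run_query(node):
    """Push a SUM query down the tree and combine partial results on the way up.

    A leaf node holds a local shard of values and computes a partial sum.
    An inner node forwards the query to its children and adds up their answers.
    """
    if "shard" in node:
        return sum(node["shard"])
    return sum(run_query(child) for child in node["children"])


# Hypothetical two-level tree: a root, two intermediate servers, four leaves.
tree = {
    "children": [
        {"children": [{"shard": [3, 5]}, {"shard": [2]}]},
        {"children": [{"shard": [7, 1]}, {"shard": [4, 4]}]},
    ]
}

total = run_query(tree)
print(total)  # 26
```

Each level only has to combine a handful of partial results, which is what keeps aggregation fast even when the leaves number in the thousands.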

By leveraging this architecture, Google was able to implement the distributed design for Dremel/BigQuery and realize the vision of the massively parallel columnar-based database on the cloud platform.

BigQuery provides the core set of features available in Dremel to third-party developers. It does so via a REST API, a command line interface, a Web UI, access control, data schema management and integration with Google Cloud Storage. BigQuery and Dremel share the same underlying architecture and performance characteristics. Users can fully utilize the power of Dremel by using BigQuery to take advantage of Google’s massive computational infrastructure. This brings valuable benefits like multiple replication across regions and high data center scalability. Most importantly, this infrastructure requires no management by the developer.

So why BigQuery over MapReduce? The difference is that MapReduce is a batch-based programming framework for very large datasets, whereas BigQuery is an interactive query tool for large datasets.

OK, how do I use it? Assuming you already have a Google Cloud account, you will have to create a new project from the dropdown in your Google Cloud Console.

Once that is done, you can navigate to the project console and enable the BigQuery API for use with your project.

Now, in the left-side menu, you can pick BigQuery from the Big Data offerings.

Before we can run any queries, we need some data! There are a couple of options here:

- Load your own data

- Use Google-provided public data

For now I will settle on the second choice and pick the Shakespeare dataset. This dataset contains the words in Shakespeare’s works, the word_count for each word, the corpus in which the word appears, and the date the corpus was written. First I will issue a simple SELECT. SELECT is the most basic clause and specifies what you want the query to return. FROM specifies which dataset we are using. GROUP BY corpus collapses the rows so that each work is listed only once.

SELECT corpus FROM [publicdata:samples.shakespeare] GROUP BY corpus

Let’s switch gears. Say we want to count something – say, the number of words in Shakespeare’s works. Luckily, we have word_count, which represents how many times a particular word appeared in a particular corpus. We can just sum all of these values, and we are left with the total number of words that he wrote.

SELECT SUM(word_count) AS count FROM [publicdata:samples.shakespeare]

945,845 words! Pretty good – but there must surely be some duplicates. How would we query the number of unique words that he used?

SELECT COUNT(word) AS count, word FROM [publicdata:samples.shakespeare] GROUP BY word ORDER BY count DESC

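Here we use the COUNT function and group the rows by word so that each word appears only once in the result. The result has 32,786 rows, one per unique word. Note that COUNT(word) counts the rows for each word, that is, the number of works in which the word appears, so the descending order puts the words found in the most works first rather than the most frequently used ones.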
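The difference between these aggregates is easy to check locally. The sketch below uses Python's built-in `sqlite3` module as a stand-in for BigQuery, with a tiny made-up table that mimics the dataset's `word`, `word_count` and `corpus` columns. The rows are hypothetical, and SQLite's dialect is not identical to BigQuery's, so treat it as an illustration of the semantics only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE shakespeare (word TEXT, word_count INTEGER, corpus TEXT)")
# Hypothetical sample rows: one row per (word, corpus) pair.
conn.executemany(
    "INSERT INTO shakespeare VALUES (?, ?, ?)",
    [
        ("the", 10, "hamlet"),
        ("the", 7, "macbeth"),
        ("sir", 3, "hamlet"),
        ("crown", 5, "macbeth"),
    ],
)

# Total number of words written: add up every word_count.
total_words = conn.execute("SELECT SUM(word_count) FROM shakespeare").fetchone()[0]

# Number of unique words: count each distinct word once.
unique_words = conn.execute("SELECT COUNT(DISTINCT word) FROM shakespeare").fetchone()[0]

# Per word: COUNT(word) is the number of works, SUM(word_count) the number of uses.
per_word = conn.execute(
    "SELECT word, COUNT(word) AS works, SUM(word_count) AS uses "
    "FROM shakespeare GROUP BY word ORDER BY uses DESC"
).fetchall()

print(total_words)   # 25
print(unique_words)  # 3
print(per_word)      # [('the', 2, 17), ('crown', 1, 5), ('sir', 1, 3)]
```

Ordering by `uses` rather than `works` is what ranks words by how often they were actually written.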

OK, let’s finally add a WHERE clause. For example, I wonder how many times Shakespeare uses the word “Sir”:

SELECT word, SUM(word_count) AS count FROM [publicdata:samples.shakespeare] WHERE word = "Sir" GROUP BY word ORDER BY count DESC

From the results I can state that Shakespeare was a really polite guy:

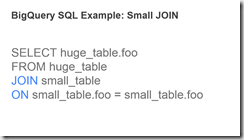
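One thing to keep in mind with a filter like `word = "Sir"` is that string equality is case-sensitive, so a lowercase “sir” would not be counted if the dataset stores both spellings. The sketch below shows the effect using Python's built-in `sqlite3` as a local stand-in; the rows are hypothetical and only mimic the dataset's columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE shakespeare (word TEXT, word_count INTEGER, corpus TEXT)")
# Hypothetical rows containing both capitalizations of the same word.
conn.executemany(
    "INSERT INTO shakespeare VALUES (?, ?, ?)",
    [
        ("Sir", 4, "hamlet"),
        ("sir", 6, "hamlet"),
        ("Sir", 2, "macbeth"),
        ("madam", 1, "macbeth"),
    ],
)

# Exact match: only rows spelled "Sir" are summed.
exact = conn.execute(
    "SELECT SUM(word_count) FROM shakespeare WHERE word = ?", ("Sir",)
).fetchone()[0]

# Case-insensitive match: normalize the column before comparing.
any_case = conn.execute(
    "SELECT SUM(word_count) FROM shakespeare WHERE LOWER(word) = ?", ("sir",)
).fetchone()[0]

print(exact)     # 6
print(any_case)  # 12
```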

This, of course, is pretty basic. However, BigQuery now allows joins as well. I could take my large table and join it to a smaller lookup table using standard ANSI SQL join syntax.

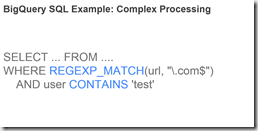
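As a rough idea of what such a join looks like, here is a sketch that again uses Python's built-in `sqlite3` as a local stand-in for BigQuery. The `corpus_genre` lookup table and all of its rows are hypothetical and are not part of the public dataset.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE shakespeare (word TEXT, word_count INTEGER, corpus TEXT)")
# Hypothetical small lookup table mapping each work to a genre.
conn.execute("CREATE TABLE corpus_genre (corpus TEXT, genre TEXT)")

conn.executemany(
    "INSERT INTO shakespeare VALUES (?, ?, ?)",
    [
        ("the", 10, "hamlet"),
        ("the", 7, "macbeth"),
        ("love", 4, "asyoulikeit"),
        ("sir", 3, "hamlet"),
    ],
)
conn.executemany(
    "INSERT INTO corpus_genre VALUES (?, ?)",
    [("hamlet", "tragedy"), ("macbeth", "tragedy"), ("asyoulikeit", "comedy")],
)

# Join the large fact table to the small lookup table, then aggregate by genre.
words_per_genre = conn.execute(
    "SELECT g.genre, SUM(s.word_count) AS uses "
    "FROM shakespeare AS s "
    "JOIN corpus_genre AS g ON s.corpus = g.corpus "
    "GROUP BY g.genre ORDER BY uses DESC"
).fetchall()

print(words_per_genre)  # [('tragedy', 20), ('comedy', 4)]
```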

There are also some complex functions it supports like:

In the next part I am planning to upload a dataset and do some joins and more complex processing.

For more on BigQuery see:

- https://cloud.google.com/bigquery/web-ui-quickstart

- http://martinfowler.com/articles/bigQueryPOC.html

- https://support.google.com/analytics/answer/4419694?hl=en

- http://googlecode.blogspot.com/2011/11/google-bigquery-service-big-data.html