Amazon DynamoDB is AWS's primary NoSQL data store. Announced on January 18, 2012, it is a fully managed NoSQL database service that provides fast, predictable performance along with excellent scalability. Unlike other Amazon services, DynamoDB lets developers purchase capacity in terms of throughput rather than storage. In the provisioned-throughput model described here, the database does not scale throughput on its own. Instead, administrators request more throughput, and DynamoDB spreads the data and traffic over a number of SSD-backed servers to keep performance predictable. It also integrates with Hadoop via Amazon Elastic MapReduce.

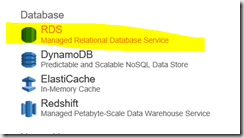

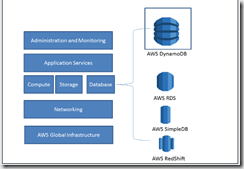

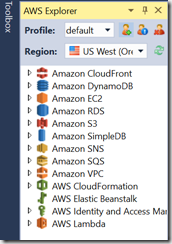

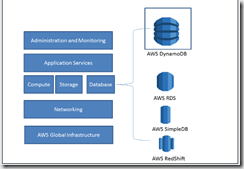

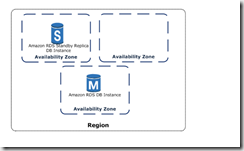

The diagram above shows how Amazon organizes its cloud services and exactly where DynamoDB fits. Amazon RDS is a relational database offered as a service over the Internet, while SimpleDB and DynamoDB are NoSQL database services. Both SimpleDB and DynamoDB are fully managed, non-relational services, but DynamoDB is built for fast, seamless scalability and high performance. It runs on SSDs for faster responses and imposes no fixed limit on table size or request capacity. It also automatically partitions your data across the cluster to meet your throughput needs. SimpleDB, by contrast, limits each domain to 10 GB of storage and a limited number of requests per second, and you have to manage partitioning across domains yourself. Choose the service that matches your needs.

I already covered the basics of getting started with the AWS SDK for .NET in my previous post, so I will not repeat them here. Instead, here are a few basics to keep in mind:

- The AWS SDK for .NET provides three programming models for communicating with DynamoDB: the low-level model, the document model, and the object persistence model.

- The low-level programming model wraps direct calls to the DynamoDB service. You access this model through the Amazon.DynamoDBv2 namespace. Of the three models, the low-level model requires you to write the most code.

- The document programming model provides an easier way to work with data in DynamoDB. This model is specifically intended for accessing tables and the items in them. You access this model through the Amazon.DynamoDBv2.DocumentModel namespace. The document model is easier to code against than the low-level model, but it does not expose as many features. For example, you can use this model to create, retrieve, update, and delete items in tables. However, to create the tables themselves you must use the low-level model.

- The object persistence programming model is specifically designed for storing, loading, and querying .NET objects in DynamoDB. You access this model through the Amazon.DynamoDBv2.DataModel namespace.
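The sample later in this post uses the low-level and document models. For comparison, here is a minimal sketch of the object persistence model. It assumes the Customer table created below, with a string hash key named CustomerID. The class names CustomerRecord and ObjectPersistenceSample are hypothetical. The sketch uses the async SaveAsync/LoadAsync methods because they exist in every build of the SDK, whereas the synchronous calls used elsewhere in this post target .NET Framework.

```csharp
using System.Threading.Tasks;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.DataModel;

// Hypothetical POCO mapped to the "Customer" table.
[DynamoDBTable("Customer")]
public class CustomerRecord
{
    // Maps to the table's hash key.
    [DynamoDBHashKey]
    public string CustomerID { get; set; }

    // Public properties without attributes are mapped to attributes of the same name.
    public string CompanyName { get; set; }
    public string City { get; set; }
    public string State { get; set; }
}

public static class ObjectPersistenceSample
{
    // Saves the object, then reads it back by hash key.
    // Needs AWS credentials and an existing "Customer" table at run time.
    public static async Task<CustomerRecord> SaveAndLoadAsync(IAmazonDynamoDB client, CustomerRecord customer)
    {
        using (var context = new DynamoDBContext(client))
        {
            await context.SaveAsync(customer);
            return await context.LoadAsync<CustomerRecord>(customer.CustomerID);
        }
    }
}
```

With this model, the attribute mapping replaces the hand-built AttributeValue dictionaries used in the low-level sample.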

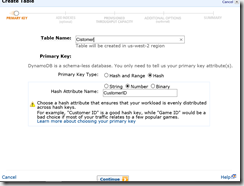

We will start by navigating to the AWS console and creating a table.

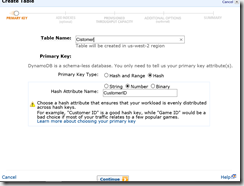

To keep the tutorial simple, I will create a Customer table with a couple of fields. Even so, I have to think a little about the table design, in particular about hash and range keys. DynamoDB supports two kinds of primary key. With a "Hash and Range Primary Key", each item's unique primary key is made up of both the hash key and the range key.

- Hash Primary Key – The primary key is made of one attribute, a hash attribute. For example, a ProductCatalog table can have ProductID as its primary key. DynamoDB builds an unordered hash index on this attribute. Every item is keyed off this value, and every item in the table must have a unique value for it. An unordered hash index means what it says: the data is not ordered, and you get no guarantees about how it is stored. You cannot run queries such as "Get me all rows that have a ProductID greater than X" against an unordered index. Instead, you write and fetch items by hash key, for example "Get me the row that has ProductID X". These gets are essentially key-value lookups, so they are very fast and use very little throughput.

- Hash and Range Primary Key – The primary key is made of two attributes: the first is the hash attribute and the second is the range attribute. For example, a forum Thread table can have ForumName and Subject as its primary key, where ForumName is the hash attribute and Subject is the range attribute. DynamoDB builds an unordered hash index on the hash attribute and a sorted range index on the range attribute, so every item's primary key is the combination of its hash and range key. If you have both the hash and the range key, you can get a single item directly. You can also query against the sorted range index, for example "Get me all rows with hash key X that have range keys greater than Y", or other queries to that effect. These gets and queries perform better and consume less capacity than scans, which read the entire table and filter on non-key attributes.
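To make the difference concrete, here is a toy, in-memory C# model of the two key types. It is only an illustration and not how DynamoDB actually stores data. A plain dictionary stands in for the unordered hash index, and a per-hash-key sorted set stands in for the sorted range index. The class name and the sample product and thread values are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy model of DynamoDB's two primary-key types (illustration only).
public static class KeyModelDemo
{
    // Hash primary key: an unordered map, useful only for exact-match lookups.
    static readonly Dictionary<string, string> productCatalog = new Dictionary<string, string>
    {
        { "101", "Book" }, { "205", "Bicycle" }, { "150", "Pen" }
    };

    // Hash and range primary key: each hash value owns a set of range keys kept in sorted order.
    static readonly Dictionary<string, SortedSet<string>> threads = new Dictionary<string, SortedSet<string>>
    {
        { "Amazon DynamoDB", new SortedSet<string>(StringComparer.Ordinal) { "DynamoDB Thread 1", "DynamoDB Thread 2", "DynamoDB Thread 3" } },
        { "Amazon S3", new SortedSet<string>(StringComparer.Ordinal) { "S3 Thread 1" } }
    };

    // "Get me the row that has ProductID X": a single key-value lookup; null if absent.
    public static string GetProduct(string productId) =>
        productCatalog.TryGetValue(productId, out var name) ? name : null;

    // "Get me all rows with hash key X whose range key is greater than Y".
    // Only one hash bucket is examined, and matches come back in range-key order.
    public static List<string> QueryThreads(string forumName, string subjectGreaterThan) =>
        threads.TryGetValue(forumName, out var subjects)
            ? subjects.Where(s => string.CompareOrdinal(s, subjectGreaterThan) > 0).ToList()
            : new List<string>();
}
```

Note what the model cannot answer: there is no cheap way to ask the product dictionary for "all ProductIDs greater than X", which mirrors the limitation of a hash-only key.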

For my very simple example, I will use a simple, unique hash primary key:

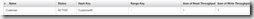
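The same table can also be created from code with the low-level model. Here is a minimal sketch, assuming a string hash key named CustomerID and an illustrative read/write capacity of 5 units each. The class name CustomerTableDefinition is hypothetical.

```csharp
using System.Collections.Generic;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

public static class CustomerTableDefinition
{
    // Builds (but does not send) a request for a table keyed only on CustomerID.
    public static CreateTableRequest Build() => new CreateTableRequest
    {
        TableName = "Customer",
        // Only key attributes are declared up front; other attributes are schemaless.
        AttributeDefinitions = new List<AttributeDefinition>
        {
            new AttributeDefinition { AttributeName = "CustomerID", AttributeType = ScalarAttributeType.S }
        },
        // A single HASH element gives a simple hash primary key.
        KeySchema = new List<KeySchemaElement>
        {
            new KeySchemaElement { AttributeName = "CustomerID", KeyType = KeyType.HASH }
        },
        // Illustrative capacity values (assumption).
        ProvisionedThroughput = new ProvisionedThroughput { ReadCapacityUnits = 5, WriteCapacityUnits = 5 }
    };
}
```

On .NET Framework the request can be sent with client.CreateTable(CustomerTableDefinition.Build()). .NET Core builds of the SDK expose only CreateTableAsync.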

After finishing the Create Table wizard, I can see my table in the console.

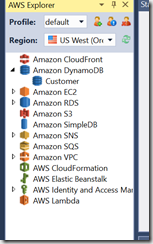

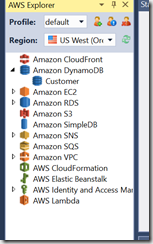

Better yet, I can now easily view and modify my table in AWS Explorer in Visual Studio, add or remove indexes, and so on:

A few words on indexing. A quick question: why does a query that includes the primary key field (especially in the WHERE condition) return results much faster than one that does not? Because most databases automatically create an index on the primary key field. The same is true of DynamoDB, where this index is called the table's primary index. The primary index cannot be customized, so it is seldom discussed. DynamoDB also supports two types of secondary indexes:

- Local Secondary Indexes – an index that has the same hash key as the table but a different range key. A local secondary index is "local" in the sense that every partition of the index is scoped to a table partition that has the same hash key.

- Global Secondary Indexes – an index whose hash and range key can differ from those of the table. A global secondary index is considered "global" because queries on the index can span all of the data in the table, across all partitions.
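As a sketch of how both index types are declared, here is a CreateTableRequest for the forum Thread table described earlier. The LastPostDateTime and LastPostedBy attributes, the index names, and the capacity values are assumptions for illustration. The local index reuses the table's hash key with a different range key. The global index has its own key and its own provisioned throughput.

```csharp
using System.Collections.Generic;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

public static class ThreadTableDefinition
{
    static KeySchemaElement Key(string name, KeyType type) =>
        new KeySchemaElement { AttributeName = name, KeyType = type };

    static ProvisionedThroughput Capacity() =>
        new ProvisionedThroughput { ReadCapacityUnits = 5, WriteCapacityUnits = 5 };

    public static CreateTableRequest Build() => new CreateTableRequest
    {
        TableName = "Thread",
        // Every attribute used in the table's or an index's key schema must be declared.
        AttributeDefinitions = new List<AttributeDefinition>
        {
            new AttributeDefinition { AttributeName = "ForumName", AttributeType = ScalarAttributeType.S },
            new AttributeDefinition { AttributeName = "Subject", AttributeType = ScalarAttributeType.S },
            new AttributeDefinition { AttributeName = "LastPostDateTime", AttributeType = ScalarAttributeType.S },
            new AttributeDefinition { AttributeName = "LastPostedBy", AttributeType = ScalarAttributeType.S }
        },
        KeySchema = new List<KeySchemaElement> { Key("ForumName", KeyType.HASH), Key("Subject", KeyType.RANGE) },
        ProvisionedThroughput = Capacity(),
        // Local index: same hash key, different range key; it must be defined at table creation.
        LocalSecondaryIndexes = new List<LocalSecondaryIndex>
        {
            new LocalSecondaryIndex
            {
                IndexName = "LastPostIndex",
                KeySchema = new List<KeySchemaElement> { Key("ForumName", KeyType.HASH), Key("LastPostDateTime", KeyType.RANGE) },
                Projection = new Projection { ProjectionType = ProjectionType.KEYS_ONLY }
            }
        },
        // Global index: its own hash key and its own provisioned throughput.
        GlobalSecondaryIndexes = new List<GlobalSecondaryIndex>
        {
            new GlobalSecondaryIndex
            {
                IndexName = "PostedByIndex",
                KeySchema = new List<KeySchemaElement> { Key("LastPostedBy", KeyType.HASH) },
                Projection = new Projection { ProjectionType = ProjectionType.ALL },
                ProvisionedThroughput = Capacity()
            }
        }
    };
}
```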

Local secondary indexes consume throughput from the table. When you query a local index, the operation consumes read capacity units from the table. A write operation (create, update, delete) on a table with a local index results in two writes, one to the table and one to the index, and both consume write capacity units from the table. Global secondary indexes have their own provisioned throughput. A query against a global index consumes read capacity units from the index, not from the table. A write to a table with a global index again results in two writes: the table write consumes the table's write capacity, and the index write consumes the index's own write capacity. In both cases the index write happens only when the item contains the index's key attributes.
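To put rough numbers on this, here is a small helper based on DynamoDB's published sizing rules. One write capacity unit covers one write per second of an item up to 1 KB. One read capacity unit covers one strongly consistent read per second, or two eventually consistent reads, of an item up to 4 KB. The PutCost helper is a simplification assumed for illustration. It charges one write for the item and one for the index entry, and it ignores cases such as updates that change an index key attribute, which cost additional index writes.

```csharp
using System;

// Back-of-the-envelope capacity arithmetic (approximation, not a billing calculator).
public static class CapacityMath
{
    // Writes are metered in 1 KB increments, rounded up (minimum 1 unit).
    public static int WriteUnits(double itemSizeKb) =>
        Math.Max(1, (int)Math.Ceiling(itemSizeKb));

    // Reads are metered in 4 KB increments, rounded up; eventually consistent reads cost half.
    public static double ReadUnits(double itemSizeKb, bool stronglyConsistent)
    {
        int units = Math.Max(1, (int)Math.Ceiling(itemSizeKb / 4.0));
        return stronglyConsistent ? units : units / 2.0;
    }

    // Cost of putting one new item into a table with one secondary index.
    // indexEntrySizeKb (the projected index entry size) is supplied by the caller.
    // With a local index both parts come from the table's write capacity;
    // with a global index the second part comes from the index's own write capacity.
    public static (int table, int index) PutCost(double itemSizeKb, double indexEntrySizeKb) =>
        (WriteUnits(itemSizeKb), WriteUnits(indexEntrySizeKb));
}
```

For example, a 1.5 KB item whose index entry is 0.2 KB costs 2 units for the table write plus 1 unit for the index write. With a global index, that second unit is drawn from the index's own provisioned capacity.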

Local secondary indexes can only be created together with the table. There is no way to add a local secondary index to an existing table, and once you create one you cannot delete it. Global secondary indexes can be created along with the table or added to an existing table later, and an existing global secondary index can also be deleted.
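Because global secondary indexes can be added to and removed from an existing table, both operations go through an UpdateTable request. Local secondary indexes have no equivalent. Here is a minimal sketch, assuming a hypothetical StateIndex on the Customer table's State attribute with illustrative capacity values. The new index's key attribute must also be declared in AttributeDefinitions.

```csharp
using System.Collections.Generic;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

public static class CustomerIndexChanges
{
    // Adds a global secondary index keyed on State to the existing Customer table.
    public static UpdateTableRequest AddStateIndex() => new UpdateTableRequest
    {
        TableName = "Customer",
        // Key attributes of the new index must be declared.
        AttributeDefinitions = new List<AttributeDefinition>
        {
            new AttributeDefinition { AttributeName = "State", AttributeType = ScalarAttributeType.S }
        },
        GlobalSecondaryIndexUpdates = new List<GlobalSecondaryIndexUpdate>
        {
            new GlobalSecondaryIndexUpdate
            {
                Create = new CreateGlobalSecondaryIndexAction
                {
                    IndexName = "StateIndex",
                    KeySchema = new List<KeySchemaElement>
                    {
                        new KeySchemaElement { AttributeName = "State", KeyType = KeyType.HASH }
                    },
                    Projection = new Projection { ProjectionType = ProjectionType.ALL },
                    // A global index has its own throughput (illustrative values).
                    ProvisionedThroughput = new ProvisionedThroughput { ReadCapacityUnits = 5, WriteCapacityUnits = 5 }
                }
            }
        }
    };

    // Deletes the same global secondary index.
    public static UpdateTableRequest DropStateIndex() => new UpdateTableRequest
    {
        TableName = "Customer",
        GlobalSecondaryIndexUpdates = new List<GlobalSecondaryIndexUpdate>
        {
            new GlobalSecondaryIndexUpdate { Delete = new DeleteGlobalSecondaryIndexAction { IndexName = "StateIndex" } }
        }
    };
}
```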

Important Documentation Note: In order for a table write to succeed, the provisioned throughput settings for the table and all of its global secondary indexes must have enough write capacity to accommodate the write; otherwise, the write to the table will be throttled.

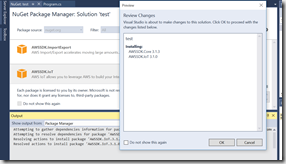

Next, I will fire up Visual Studio and create a new AWS Console Application project:

To illustrate how easy it is to put data into a table and get it back, I wrote a very simple code snippet. First, I created a simple Customer class representing a customer of some mythical company, for which we track a unique ID, company name, city, and state:

```csharp
namespace DynamoDBTest
{
    // Simple customer entity: unique ID, company name, city and state
    class Customer
    {
        public string CustomerID { get; set; }
        public string CompanyName { get; set; }
        public string City { get; set; }
        public string State { get; set; }

        public Customer(string customerID, string companyName, string city, string state)
        {
            CustomerID = customerID;
            CompanyName = companyName;
            City = city;
            State = state;
        }

        // Parameterless constructor for code that sets properties individually
        public Customer()
        {
        }
    }
}
```

Once that is done, I can add data to DynamoDB and read it back. Out of interest, my sample uses both models: the low-level model to put items and the document model to get them:

```csharp
using System;
using System.Collections.Generic;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;
using Amazon.DynamoDBv2.DocumentModel;

namespace DynamoDBTest
{
    class Program
    {
        // One client is reused for all calls; credentials and region come from the
        // application's AWS configuration (e.g. App.config or the SDK credential profile).
        private static readonly AmazonDynamoDBClient client = new AmazonDynamoDBClient();

        public static void Main(string[] args)
        {
            // Add some sample data
            AddCustomer("1", "SampleCo1", "Seattle", "WA");
            AddCustomer("2", "SampleCo2", "Reston", "VA");
            AddCustomer("3", "SampleCo3", "Minneapolis", "MN");
            Console.WriteLine("Added sample data");

            // Read each customer back by its hash key
            foreach (var id in new[] { "1", "2", "3" })
            {
                Customer myCustomer = GetCustomerByID(id);
                if (myCustomer == null)
                {
                    Console.WriteLine("Customer " + id + " not found");
                    continue;
                }
                Console.WriteLine("Retrieved Sample Data..." + myCustomer.CustomerID + " " + myCustomer.CompanyName + " " + myCustomer.City + " " + myCustomer.State);
            }

            Console.Read();
        }

        // Low-level model: build a PutItemRequest with explicit, typed AttributeValues
        public static void AddCustomer(string customerID, string companyName, string city, string state)
        {
            var request = new PutItemRequest
            {
                TableName = "Customer",
                Item = new Dictionary<string, AttributeValue>
                {
                    { "CustomerID", new AttributeValue { S = customerID } },
                    { "CompanyName", new AttributeValue { S = companyName } },
                    { "City", new AttributeValue { S = city } },
                    { "State", new AttributeValue { S = state } }
                }
            };
            // Synchronous call, available in the .NET Framework build of the SDK
            client.PutItem(request);
        }

        // Document model: load the table description, then fetch an item by hash key
        public static Customer GetCustomerByID(string customerID)
        {
            Table table = Table.LoadTable(client, "Customer");
            Document item = table.GetItem(new Primitive(customerID));
            if (item == null)
            {
                return null; // no item with this key
            }
            return new Customer(item["CustomerID"].AsString(), item["CompanyName"].AsString(),
                                item["City"].AsString(), item["State"].AsString());
        }
    }
}
```
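For symmetry, here is a hedged sketch of reading the same item with the low-level model instead of the document model. The class and method names are hypothetical. It uses GetItemAsync, which exists in all builds of the SDK. It also requests a strongly consistent read, which uses twice the read capacity of the default eventually consistent read.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

public static class LowLevelGet
{
    // Builds a GetItem request keyed on the table's hash key.
    public static GetItemRequest BuildRequest(string customerId) => new GetItemRequest
    {
        TableName = "Customer",
        Key = new Dictionary<string, AttributeValue>
        {
            { "CustomerID", new AttributeValue { S = customerId } }
        },
        ConsistentRead = true // strongly consistent; the default is eventually consistent
    };

    // Returns the company name, or null when no item has this key.
    public static async Task<string> GetCompanyNameAsync(IAmazonDynamoDB client, string customerId)
    {
        GetItemResponse response = await client.GetItemAsync(BuildRequest(customerId));
        // An empty (or null) Item means the key was not found.
        if (response.Item != null && response.Item.TryGetValue("CompanyName", out AttributeValue value))
        {
            return value.S;
        }
        return null;
    }
}
```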

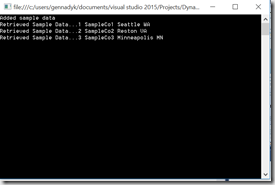

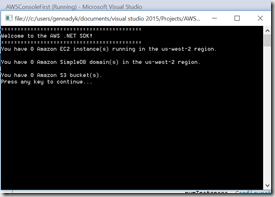

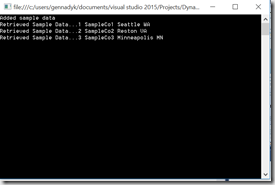

The results when I run the application are pretty simple, but you can see how easy it is to put an item and get an item. And it's fast, really fast…

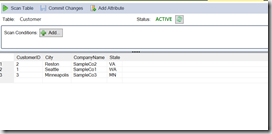

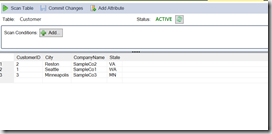

From AWS Explorer in Visual Studio, I can confirm the rows in my table:

Hope this was useful. For more information, see http://blogs.aws.amazon.com/net/post/Tx17SQHVEMW8MXC/DynamoDB-APIs, http://docs.aws.amazon.com/amazondynamodb/latest/developerguide/GuidelinesForLSI.html, http://yourstory.com/2012/02/step-by-step-guide-to-amazon-dynamodb-for-net-developers/, and http://blog.grio.com/2012/03/getting-started-with-amazon-dynamodb.html
