In my professional history with SQL Server I have seen this topic frequently misunderstood. I have seen consultants blame NUMA for every problem on a system and proactively turn it off in favor of SMP, while others knew nothing about NUMA and paid no attention to it. NUMA is important, and blaming it for all your SQL Server issues without evidence is wrong. On the other hand, you should be aware of it and of how it affects SQL Server performance.

Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data are often associated strongly with certain tasks or users.

Prior to the introduction of NUMA, things were arguably simpler, because the architecture in use was pure SMP. Symmetric multiprocessing (SMP) is a multiprocessor hardware and software architecture in which two or more identical processors connect to a single, shared main memory, have full access to all I/O devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes.

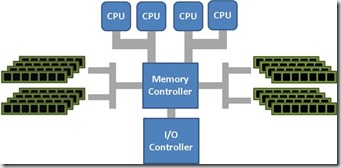

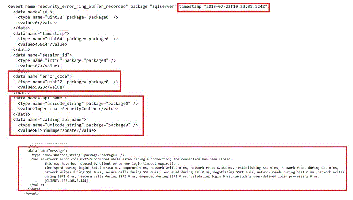

This is how SMP and NUMA look logically:

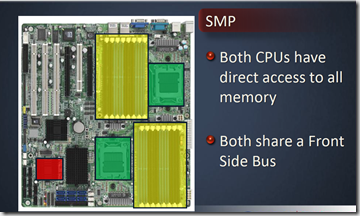

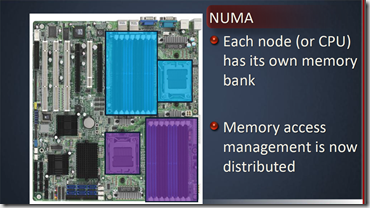

This is how it actually looks on the motherboard:

SMP (note the single memory bus for access):

NUMA (note that each CPU has its own memory):

Modern CPUs operate considerably faster than the main memory they use. In the early days of computing and data processing, the CPU generally ran slower than its own memory. The performance lines of processors and memory crossed in the 1960s with the advent of the first supercomputers. Since then, CPUs increasingly have found themselves “starved for data” and having to stall while waiting for data to arrive from memory. Many supercomputer designs of the 1980s and 1990s focused on providing high-speed memory access as opposed to faster processors, allowing the computers to work on large data sets at speeds other systems could not approach.

Limiting the number of memory accesses provided the key to extracting high performance from a modern computer. For commodity processors, this meant installing an ever-increasing amount of high-speed cache memory and using increasingly sophisticated algorithms to avoid cache misses. But the dramatic increase in size of the operating systems and of the applications run on them has generally overwhelmed these cache-processing improvements. Multi-processor systems without NUMA make the problem considerably worse. Now a system can starve several processors at the same time, notably because only one processor can access the computer’s memory at a time.[2]

NUMA attempts to address this problem by providing separate memory for each processor, avoiding the performance hit when several processors attempt to address the same memory. For problems involving spread data (common for servers and similar applications), NUMA can improve the performance over a single shared memory by a factor of roughly the number of processors (or separate memory banks).[3] Another approach to addressing this problem, used mainly by non-NUMA systems, is the multi-channel memory architecture, in which additional memory channels increase the number of simultaneous memory accesses.

The main benefit of NUMA is scalability. The NUMA architecture was designed to surpass the scalability limits of the SMP architecture. With SMP, all memory access is posted to the same shared memory bus. This works fine for a relatively small number of CPUs, but not when you have dozens, even hundreds, of CPUs competing for access to the shared memory bus. NUMA alleviates these bottlenecks by limiting the number of CPUs on any one memory bus and connecting the various nodes by means of a high speed interconnection.
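The cost difference between local and remote memory described above can be illustrated with a toy model. This is only a sketch: the function name and both latency figures are hypothetical values picked to keep the arithmetic readable, not measurements from any real machine.

```python
def average_access_ns(local_fraction, local_ns, remote_ns):
    """Expected memory access latency for a given share of node-local accesses."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# Hypothetical latencies, chosen only to make the arithmetic easy to follow.
LOCAL_NS = 100.0
REMOTE_NS = 150.0

for fraction in (1.0, 0.75, 0.5):
    latency = average_access_ns(fraction, LOCAL_NS, REMOTE_NS)
    print(f"{fraction:.0%} local -> {latency:.1f} ns on average")
```

The model ignores bus contention and caching entirely. It only shows that the average cost rises steadily as more accesses cross the interconnect, which is why memory locality matters on NUMA hardware.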

SQL Server and NUMA.

SQL Server groups schedulers to map to the grouping of CPUs, based on the hardware NUMA boundary exposed by Windows. For example, a 16-way box may have 4 NUMA nodes, each node having 4 CPUs. This allows for a greater memory locality for that group of schedulers when tasks are processed on the node. With SQL Server you can further subdivide CPUs associated with a hardware NUMA node into multiple CPU nodes. This is known as soft-NUMA. Typically, you would subdivide CPUs to partition the work across CPU nodes.
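The grouping in the 16-way example can be sketched in a few lines of Python. This is an illustration only, not how SQL Server implements it: the function names are hypothetical, and it assumes CPU ids are numbered consecutively within each node, which real hardware does not guarantee.

```python
def group_cpus(cpu_count, cpus_per_node):
    """Split CPU ids 0..cpu_count-1 into consecutive nodes of equal size."""
    if cpus_per_node <= 0 or cpu_count % cpus_per_node != 0:
        raise ValueError("cpu_count must be a positive multiple of cpus_per_node")
    return [list(range(start, start + cpus_per_node))
            for start in range(0, cpu_count, cpus_per_node)]


def split_into_soft_nodes(hardware_nodes, cpus_per_soft_node):
    """Subdivide each hardware node into smaller soft-NUMA nodes.

    Each hardware node is subdivided on its own, so a soft node
    never spans two hardware nodes.
    """
    if cpus_per_soft_node <= 0:
        raise ValueError("cpus_per_soft_node must be positive")
    soft_nodes = []
    for node in hardware_nodes:
        if len(node) % cpus_per_soft_node != 0:
            raise ValueError("node size must be a multiple of cpus_per_soft_node")
        for start in range(0, len(node), cpus_per_soft_node):
            soft_nodes.append(node[start:start + cpus_per_soft_node])
    return soft_nodes


hardware = group_cpus(16, 4)               # the 16-way, 4-node example above
soft = split_into_soft_nodes(hardware, 2)  # hypothetical soft-NUMA layout
print(len(hardware), "hardware nodes:", hardware)
print(len(soft), "soft-NUMA nodes:", soft)
```

Splitting each four-CPU hardware node in half gives eight soft-NUMA nodes of two CPUs each, all of which still sit inside a single hardware node.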

When a thread running on a specific hardware NUMA node allocates memory, the memory manager of SQL Server tries to allocate memory from the memory associated with the NUMA node for locality of reference. Similarly, buffer pool pages are distributed across hardware NUMA nodes. It is more efficient for a thread to access memory from a buffer page that is allocated on the local memory than to access it from foreign memory. For more information, see Growing and Shrinking the Buffer Pool Under NUMA.

Each NUMA node (hardware NUMA or soft-NUMA) has an associated I/O completion port that is used to handle network I/O. This helps distribute the network I/O handling across multiple ports. When a client connection is made to SQL Server, it is bound to one of the nodes. All batch requests from this client will be processed on that node.

Common Issues affecting SQL Server performance:

An imbalance between local and foreign memory, leading to increased query latency and performance issues. This is best documented here: http://blogs.msdn.com/b/psssql/archive/2010/02/23/how-it-works-sql-server-2008-numa-and-foreign-pages.aspx.

I mentioned above the idea of memory that is “local” to the scheduler/CPU versus “remote” memory. Not only is remote access more expensive: when Microsoft SQL Server 2012 requires memory in a non-uniform memory access (NUMA)-enabled environment, Windows may allocate too much memory from a remote node before allocating a page of local memory. This issue is fixed in Cumulative Update 3 for SQL Server 2012 Service Pack 1. The workaround without the fix is to disable NUMA by enabling trace flag 8015 when the SQL Server service starts. This directs the SQL Server memory manager to treat all memory as a single node, which turns off NUMA in SQL Server, just as I had to do a few times in the past.

How would we actually see this issue in the SQL Server performance counters?

| Performance Counter | Description |
| --- | --- |
| Buffer Node: Local node page lookups/sec | Database page reads and writes that are satisfied from the local buffer pool node. Note: This does not indicate if the memory for the page is local or remote to the node. It is possible the physical memory allocation is remote/away/foreign but assigned to the node in a steady state so the server is still doing a remote memory access. |
| Buffer Node: Remote node page lookups/sec | Database page reads and writes that are satisfied from the remote buffer pool node. This is normally a physical, remote memory access because of the sorting that SQL Server performs. |
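One way to read the two counters together is as a ratio per node. The sketch below assumes you have already sampled both values for each node. The function name and the sample numbers are hypothetical, and no particular threshold is implied.

```python
def remote_lookup_ratio(local_per_sec, remote_per_sec):
    """Fraction of buffer node page lookups served by a remote node."""
    total = local_per_sec + remote_per_sec
    if total == 0:
        return 0.0  # no lookups sampled, so nothing to report
    return remote_per_sec / total


# Hypothetical samples: node id -> (local lookups/sec, remote lookups/sec)
samples = {0: (9000, 1000), 1: (2000, 6000)}

for node_id, (local, remote) in samples.items():
    ratio = remote_lookup_ratio(local, remote)
    print(f"node {node_id}: {ratio:.0%} of page lookups are remote")
```

Keep in mind the caveat from the table: a lookup counted as local can still involve physically remote memory, so this ratio is a lower bound on remote access rather than an exact measure.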

Bad performance on newer/bigger machines with more than 8 logical CPUs per NUMA node.

This is best documented here: http://blogs.msdn.com/b/psssql/archive/2011/09/01/sql-server-2008-2008-r2-on-newer-machines-with-more-than-8-cpus-presented-per-numa-node-may-need-trace-flag-8048.aspx. The issue can be identified by looking for the CMEMTHREAD wait type in the DMV sys.dm_os_wait_stats and the SOS_SUSPEND_QUEUE spinlock in the DMV sys.dm_os_spinlock_stats.

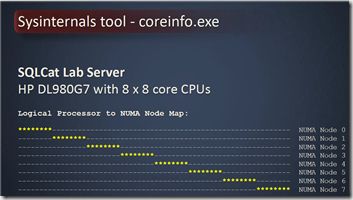

You can use the Sysinternals Coreinfo utility (coreinfo.exe) to check how CPUs are aligned to NUMA nodes. Download coreinfo.exe and run it in a command window. It is best to redirect the result into a text file that can be opened with Notepad, e.g. coreinfo > structure.txt. The program should take only a few seconds to run:

The symbol ‘*’ marks a logical processor that is part of the processor group. The symbol ‘-’ marks a logical processor that is not part of the processor group.
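Counting the ‘*’ marks by hand gets tedious on large machines, so a small script can do it. The following is a minimal sketch: the function names are hypothetical, and the sample lines are an invented excerpt that assumes each map line consists of a mask followed by a label, so check it against your own structure.txt before relying on it.

```python
def count_marked(mask):
    """Count the logical processors marked '*' in a coreinfo-style mask."""
    if not mask or set(mask) - {"*", "-"}:
        raise ValueError(f"not a processor mask: {mask!r}")
    return mask.count("*")


def processors_per_group(lines):
    """Map each label (the text after the mask) to its logical processor count."""
    counts = {}
    for line in lines:
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue  # skip blank lines and lines without a label
        mask, label = parts
        if set(mask) - {"*", "-"}:
            continue  # skip headings and other non-mask lines
        counts[label.strip()] = count_marked(mask)
    return counts


# Hypothetical excerpt; the layout is an assumption about coreinfo's output.
sample = [
    "Logical Processor to Group Map:",
    "**********------  Group 0",
    "----------******  Group 1",
]

counts = processors_per_group(sample)
print(counts)
print("even groups" if len(set(counts.values())) <= 1 else "uneven groups")
```

With this invented sample the two groups hold 10 and 6 logical processors, which is exactly the kind of uneven layout that the next paragraphs warn about.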

Processor Groups that are uneven in terms of CPU resources cause trouble for applications that are not Processor Group aware. Windows assigns such an application to one of the Processor Groups at random, so its behavior becomes non-deterministic: after a restart it might land in a different Processor Group with more or fewer CPU resources than before, and hence behave differently under the same workload.

To get predictable performance for SQL Server, we recommend configuring Windows Server 2008 R2 to create Processor Groups with the same number of logical processors per Processor Group.

On Windows Server 2008 R2 with a large number of processors, the easiest way to get an even number of logical processors per Processor Group is to apply a QFE that Microsoft released in March 2011. The QFE and the related Knowledge Base article can be found here: http://support.microsoft.com/kb/2510206.

You can see more about your NUMA nodes in SQL Server using the DMVs sys.dm_os_schedulers and sys.dm_os_nodes.

For more, see: http://blogs.msdn.com/b/psssql/archive/2015/03/02/running-sql-server-on-machines-with-more-than-8-cpus-per-numa-node-may-need-trace-flag-8048.aspx, http://blogs.msdn.com/b/psssql/archive/2014/02/04/as-the-world-turns-sql-server-numa-memory-node-and-the-operating-system-proximity.aspx, http://blogs.msdn.com/b/psssql/archive/2011/11/11/sql-server-clarifying-the-numa-configuration-information.aspx