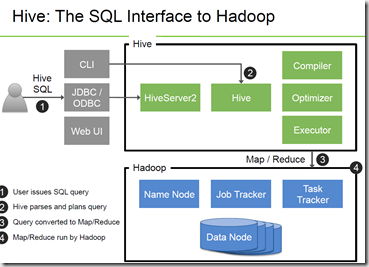

Continuing on my Big Data\Hadoop blogging journey I started from HDFS post and YARN post, I will move to my favorite Hadoop technology – Apache Hive. At its simplest definition Apache Hive is an open-source data warehouse system for querying and analyzing large datasets stored in HDFS files.

Hive has three main functions: data summarization, query and analysis. It supports queries expressed in a language called HiveQL, which automatically translates SQL-like queries into MapReduce jobs executed on Hadoop. In addition, HiveQL supports custom MapReduce scripts to be plugged into queries. Hive also enables data serialization/deserialization and increases flexibility in schema design by including a system catalog called Hive-Metastore.

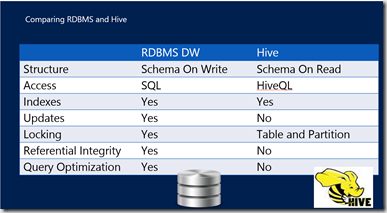

According to the Apache Hive wiki, “Hive is not designed for OLTP workloads and does not offer real-time queries or row-level updates. It is best used for batch jobs over large sets of append-only data (like web logs).” Hive supports text files (also called flat files), SequenceFiles (flat files consisting of binary key/value pairs) and RCFiles (Record Columnar Files which store columns of a table in a columnar database way.)

Hive isn’t a relational database as it only maintains metadata information about Big Data stored on HDFS. however it allows you to treat your Big Data as tables and perform SQL-like operations using HiveQL.

Hive Components. Components of Hive include HCatalog and WebHCat:

- HCatalog is a component of Hive. It is a table and storage management layer for Hadoop that enables users with different data processing tools — including Pig and MapReduce — to more easily read and write data on the grid.

- WebHCat provides a service that you can use to run Hadoop MapReduce (or YARN), Pig, Hive jobs or perform Hive metadata operations using an HTTP (REST style) interface.

Hive Data Model.

Data in Hive is organized into:

- Tables – These are analogous to Tables in Relational Databases. Tables can be filtered, projected, joined and unioned. Additionally all the data of a table is stored in a directory in HDFS. Hive also supports the notion of external tables wherein a table can be created on preexisting files or directories in HDFS by providing the appropriate location to the table creation DDL. The rows in a table are organized into typed columns similar to Relational Databases.

- Partitions – Each Table can have one or more partition keys which determine how the data is stored, for example a table T with a date partition column ds had files with data for a particular date stored in the <table location>/ds=<date> directory in HDFS. Partitions allow the system to prune data to be inspected based on query predicates, for example a query that is interested in rows from T that satisfy the predicate T.ds = ‘2008-09-01’ would only have to look at files in <table location>/ds=2008-09-01/ directory in HDFS.

- Buckets – Data in each partition may in turn be divided into Buckets based on the hash of a column in the table. Each bucket is stored as a file in the partition directory. Bucketing allows the system to efficiently evaluate queries that depend on a sample of data (these are queries that use the SAMPLE clause on the table).

Hive Metastore. The Metastore provides two important but often overlooked features of a data warehouse: data abstraction and data discovery. Without the data abstractions provided in Hive, a user has to provide information about data formats, extractors and loaders along with the query. In Hive, this information is given during table creation and reused every time the table is referenced. This is very similar to the traditional warehousing systems. The second functionality, data discovery, enables users to discover and explore relevant and specific data in the warehouse.

HiveQL Compiler and Optimizer. Just like in RDBMS HiveQL needs to be compiled and logical query plan has to be generated. Moreover, plan will be further optimized via optimizer. Just as with RDBMS Hive optimizer is moving towards cost based optimization based on statistics (SQL or Oracle anyone) – https://cwiki.apache.org/confluence/display/Hive/Cost-based+optimization+in+Hive

Enough theory – lets do Hive. Instead of installing Apache Hadoop and Hive for learning sake I would recommend that you download one of the learning VMs provided by both Cloudera and Hortonworks. In my demo I will use Hotronworks Sandbox, latest copy of that VM you can download from here – http://hortonworks.com/products/hortonworks-sandbox/#overview, if you prefer Cloudera you can get that from – http://www.cloudera.com/content/cloudera/en/downloads/quickstart_vms/cdh-5-4-x.html

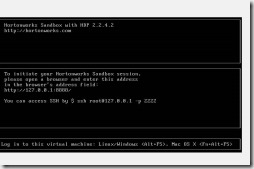

So I will launch Hortonworks sandbox on my Virtual Box:

Although I could launch hive easily command line for the purpose of this tutorial I will go easy and use very convenient Sandbox Web Console available on 127.0.0.1::8000 Console gives me number of nice visual tools such as File Browser to easily move files and visibility into hue, Pig and Hive Editors , Oozie scheduling tooling, etc.

I will use dataset on baseball statistics available from Sean Lahman – http://www.seanlahman.com/. Dataset can be downloaded from – http://seanlahman.com/files/database/lahman591-csv.zip Once we have a file we will just unzip into some temp directory. I am interested here in two files in that directory – Master,csv and Batting.csv. I can use File Explorer Utility to upload these files to hue\hdfs. File Explorer icon on the toolbar is:

Here is the utility:

Once I upload the files I can go to Beeswax – Hive Editor. To do so will click on another icon on toolbar (the one with bee on it)

Beeswax is a pretty nice editor and allows me to type in and edit my HiveQL statements, as well as get explain\execution plans for query tuning purposes (more on that in next posts).

Creating Tables. So first thing I will do is create a new table in default database provided to me. The Hive concept of a database is essentially just a catalog or namespace of tables.

However, they are very useful for larger clusters with multiple teams and users, as a

way of avoiding table name collisions. It’s also common to use databases to organize

production tables into logical groups. If you don’t specify a database, the default database is used.

The CREATE TABLE statement follows SQL conventions, but Hive’s version offers significant

extensions to support a wide range of flexibility where the data files for tables

are stored, the formats used, etc. That’s what I am doing now first:

create table temp_batting (col_value STRING);

The table we have created so far are called managed tables or sometimes called internal

tables, because Hive controls the lifecycle of their data Hive stores the data for these tables in a subdirectory under the directory defined by hive.metastore.warehouse.directory (e.g., /user/hive/warehouse), by default. There is also ability to define an external table, i.e. table where data is stored externally to Hive metastore directory.

We can get all the information we need about a table through a query too. Go back to the query editor and execute:

describe temp_batting;

The result describes the schema of the table and detailed table information. Similar to RDBMS isn’t it?

Loading data. Next I will load data into my new table from file I uploaded. This is pretty much self-explanatory:

LOAD DATA INPATH '/user/hue/Batting.csv' OVERWRITE INTO TABLE temp_batting;

Since Hive has no row-level insert, update, and delete operations, the only way to put

data into an table is to use one of the “bulk” load operations. If the LOCAL keyword is used, the path is assumed to be in the local file system. The data is copied into the final location. If LOCAL is omitted, the path is assumed to be in the distributed file system. In this case, the data is moved from the path to the final location.

Create another table. So I have table loaded with batting data ,, but I needs results table here , after all I want to get information on players doing most runs yearly.

create table batting (player_id STRING, year INT, runs INT);

Finally query:

insert overwrite table batting

SELECT

regexp_extract(col_value, '^(?:([^,]*)\,?){1}', 1) player_id,

regexp_extract(col_value, '^(?:([^,]*)\,?){2}', 1) year,

regexp_extract(col_value, '^(?:([^,]*)\,?){9}', 1) run

from temp_batting;

SELECT a.year, a.player_id, a.runs from batting a

JOIN (SELECT year, max(runs) runs FROM batting GROUP BY year ) b

ON (a.year = b.year AND a.runs = b.runs) ;

Result

Some finer points:

- We are using INSERT and SELECTs here, as well as JOIN

- SELECT is the projection operator in SQL, WHERE gives the condition of what to filter, GROUP BY gives a list of columns which specify how to aggregate the records

- INSERT OVERWRITE will overwrite any existing data in the table, whereas INSERT INTO will append data

- I use online Hive Syntax Checker here – https://sql.treasuredata.com/, to check my syntax

So this is a very basic post and I will go from there into a lot more on Hive. For more information also see – https://github.com/Prokopp/the-free-hive-book, https://altiscale.zendesk.com/hc/en-us/articles/201802346-Simple-Hive-Query-Example, http://hortonworks.com/hadoop-tutorial/hello-world-an-introduction-to-hadoop-hcatalog-hive-and-pig/, http://www.tutorialspoint.com/hive/, tutorial on Amazon – http://docs.aws.amazon.com/gettingstarted/latest/emr/getting-started-emr-load-data.html

![clip_image002[5] clip_image002[5]](https://gennadny.files.wordpress.com/2015/07/clip_image0025_thumb.jpg?w=260&h=259)

![clip_image002[10] clip_image002[10]](https://gennadny.files.wordpress.com/2015/07/clip_image00210_thumb.jpg?w=301&h=113)

![clip_image002[14] clip_image002[14]](https://gennadny.files.wordpress.com/2015/07/clip_image00214_thumb.jpg?w=244&h=153)