A few folks have asked me for a quick tutorial on setting up Redis under systemd on Ubuntu Linux 16.04.

I have blogged quite a bit about Redis already (https://gennadny.wordpress.com/category/redis/), so here is just a quick recap. Redis is an in-memory key-value store known for its flexibility, performance, and wide language support, which has made it one of the most popular key-value data stores in use today. Below are the steps to install Redis and configure it to run under systemd on Ubuntu 16.04 and above.

Here are the prerequisites:

- First, you need access to an Ubuntu 16.04 server. If you don't have one, you can always sign up for a Microsoft Azure trial and set up an Ubuntu server there, as I described previously: https://gennadny.wordpress.com/2015/03/22/using-nmnon-to-monitor-linux-system-performance-on-azure/

- You will need a non-root user with sudo privileges to perform the administrative functions required for this process.

The next steps are:

- Log in to your Ubuntu server with this user account.

- Update the package index and install the build prerequisites via apt-get (build-essential provides the compiler toolchain; tcl is needed to run the Redis test suite):

$ sudo apt-get update

$ sudo apt-get install build-essential tcl

- Now we can download and extract Redis into the /tmp directory:

$ cd /tmp

$ curl -O http://download.redis.io/redis-stable.tar.gz

$ tar xzvf redis-stable.tar.gz

$ cd redis-stable

- Next, we can build Redis:

$ make

- After the binaries are compiled, run the test suite to make sure everything was built correctly. You can do this by typing:

$ make test

- This will typically take a few minutes to run. Once it is complete, you can install the binaries onto the system by typing:

$ sudo make install
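Before moving on, it can be worth confirming that `sudo make install` actually put the binaries where the systemd unit later in this post expects them. This is a small sketch, assuming the Redis Makefile's default install prefix of /usr/local (so the binaries land in /usr/local/bin); `check_redis_binaries` is my own hypothetical helper, not something that ships with Redis.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the Redis binaries used later in this
# post exist and are executable. Assumes the default PREFIX of /usr/local,
# so binaries are expected in /usr/local/bin unless another directory is
# passed as the first argument.
check_redis_binaries() {
  local bindir="${1:-/usr/local/bin}"
  local status=0
  local bin
  for bin in redis-server redis-cli; do
    if [ -x "$bindir/$bin" ]; then
      echo "found: $bindir/$bin"
    else
      echo "missing: $bindir/$bin" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_redis_binaries && /usr/local/bin/redis-server --version` reports what was installed and then prints the Redis version.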

Now we need to configure Redis to run under systemd. systemd is an init system used by Linux distributions to bootstrap user space and then manage processes, replacing the older UNIX System V and BSD-style init systems. As of 2016, most major Linux distributions had adopted systemd as their default init system.

- To start off, we need to create a configuration directory. We will use the conventional /etc/redis directory, which can be created by typing:

$ sudo mkdir /etc/redis

- Now, copy over the sample Redis configuration file included in the Redis source archive:

$ sudo cp /tmp/redis-stable/redis.conf /etc/redis

- Next, we can open the file to adjust a few items in the configuration:

$ sudo nano /etc/redis/redis.conf

- In the file, find the supervised directive. Currently, this is set to no. Since we are running an operating system that uses the systemd init system, we can change this to systemd:

```
. . .
# If you run Redis from upstart or systemd, Redis can interact with your
# supervision tree. Options:
#   supervised no      - no supervision interaction
#   supervised upstart - signal upstart by putting Redis into SIGSTOP mode
#   supervised systemd - signal systemd by writing READY=1 to $NOTIFY_SOCKET
#   supervised auto    - detect upstart or systemd method based on
#                        UPSTART_JOB or NOTIFY_SOCKET environment variables
# Note: these supervision methods only signal "process is ready."
#       They do not enable continuous liveness pings back to your supervisor.
supervised systemd
. . .
```

- Next, find the dir directive. This option specifies the directory Redis uses to dump persistent data. We need to pick a location where Redis has write permission and that isn't viewable by normal users.

We will use the /var/lib/redis directory for this, which we will create later:

```
. . .
# The working directory.
#
# The DB will be written inside this directory, with the filename specified
# above using the 'dbfilename' configuration directive.
#
# The Append Only File will also be created inside this directory.
#
# Note that you must specify a directory here, not a file name.
dir /var/lib/redis
. . .
```

Save and close the file when you are finished.
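If you would rather script these two redis.conf edits than make them in nano, a sed sketch like the one below should do it. It assumes GNU sed (the Ubuntu default) and that the directives appear either uncommented (`supervised no`, `dir ./`), as in the sample file this post was written against, or, for `supervised`, as a commented-out `# supervised auto` line, which some newer releases ship instead. The function name is hypothetical, and since redis.conf layout varies between versions, eyeball the printed lines afterwards.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the two redis.conf changes described above
# without opening an editor. Assumes GNU sed (for -i and -E).
set_redis_systemd_options() {
  local conf="${1:-/etc/redis/redis.conf}"
  # Only a bare "supervised <word>" line (optionally commented out) is
  # rewritten; the explanatory comment lines have trailing text and are left alone.
  sed -i -E \
    -e 's|^#? *supervised [a-z]+$|supervised systemd|' \
    -e 's|^dir .*|dir /var/lib/redis|' \
    "$conf"
  # Print the resulting directives so the change can be checked.
  grep -E '^(supervised|dir) ' "$conf"
}
```

Because /etc/redis/redis.conf is owned by root, run this from a root shell (for example after `sudo -i`); it should print `supervised systemd` and `dir /var/lib/redis`.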

- Next, we can create a systemd unit file so that the init system can manage the Redis process.

Create and open the /etc/systemd/system/redis.service file to get started:

$ sudo nano /etc/systemd/system/redis.service

- The file should look like this; create the following sections:

```
[Unit]
Description=Redis In-Memory Data Store
After=network.target

[Service]
User=redis
Group=redis
ExecStart=/usr/local/bin/redis-server /etc/redis/redis.conf
ExecStop=/usr/local/bin/redis-cli shutdown
Restart=always

[Install]
WantedBy=multi-user.target
```

- Save and close the file when you are finished.
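As an alternative to nano, the same unit file can be written non-interactively, which is handy if you are scripting the whole setup. This is a sketch: `write_redis_unit` is a hypothetical helper name, and the unit body is exactly the one described above. Since the default target lives under /etc/systemd/system, run it from a root shell.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the redis.service unit described above.
# Defaults to /etc/systemd/system/redis.service; pass another path to
# write it somewhere else first (for example, to review it).
write_redis_unit() {
  local target="${1:-/etc/systemd/system/redis.service}"
  # The quoted 'EOF' stops the shell from expanding anything in the body.
  cat > "$target" <<'EOF'
[Unit]
Description=Redis In-Memory Data Store
After=network.target

[Service]
User=redis
Group=redis
ExecStart=/usr/local/bin/redis-server /etc/redis/redis.conf
ExecStop=/usr/local/bin/redis-cli shutdown
Restart=always

[Install]
WantedBy=multi-user.target
EOF
}
```

After writing or changing a unit file, `systemctl daemon-reload` tells systemd to reread its unit files.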

Now, we just have to create the user, group, and directory that we referenced in the previous two files.

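Begin by creating the redis user and group. This can be done in a single command by typing:

$ sudo adduser --system --group --no-create-home redis

Next, create the /var/lib/redis directory, give the redis user and group ownership of it, and restrict access so that normal users cannot read it:

$ sudo mkdir /var/lib/redis

$ sudo chown redis:redis /var/lib/redis

$ sudo chmod 770 /var/lib/redis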

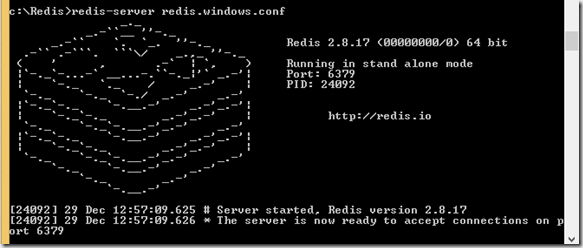
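To confirm the data directory is set up the way redis.conf and the unit file expect, you can compare its owner, group, and permission bits against what you intended. A small sketch, assuming GNU coreutils `stat` (for its `-c` format option); the helper name and the default expectations (redis:redis, mode 770) are my assumptions for illustration, so pass different values if you chose differently.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a directory has the expected owner:group
# and octal mode. Uses GNU stat's -c format (%U user, %G group, %a mode).
check_redis_dir() {
  local dir="${1:-/var/lib/redis}"
  local want_owner="${2:-redis:redis}"
  local want_mode="${3:-770}"
  local owner mode
  if [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  owner=$(stat -c '%U:%G' "$dir")
  mode=$(stat -c '%a' "$dir")
  if [ "$owner" != "$want_owner" ] || [ "$mode" != "$want_mode" ]; then
    echo "$dir is $owner mode $mode, expected $want_owner mode $want_mode" >&2
    return 1
  fi
  echo "$dir OK ($owner, mode $mode)"
}
```

Running `check_redis_dir` with no arguments checks /var/lib/redis against redis:redis and mode 770.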

Now we can start Redis:

$ sudo systemctl start redis

Check that the service started without errors by running:

$ sudo systemctl status redis

And eureka! Here is the response:

```
redis.service - Redis Server
   Loaded: loaded (/etc/systemd/system/redis.service; enabled; vendor preset: enabled)
   Active: active (running) since Wed 2016-05-11 14:38:08 EDT; 1min 43s ago
  Process: 3115 ExecStop=/usr/local/bin/redis-cli shutdown (code=exited, status=0/SUCCESS)
 Main PID: 3124 (redis-server)
    Tasks: 3 (limit: 512)
   Memory: 864.0K
      CPU: 179ms
   CGroup: /system.slice/redis.service
           └─3124 /usr/local/bin/redis-server 127.0.0.1:6379
```

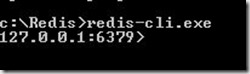

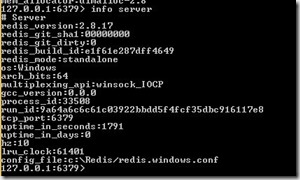

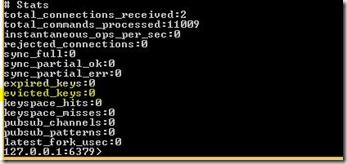

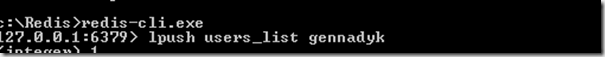

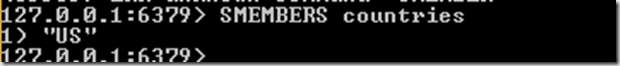

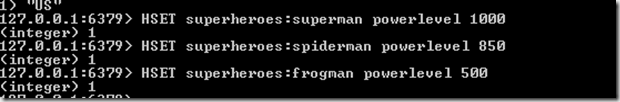
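`systemctl status` tells you the process is running, but a more direct check is to ask the server itself: `redis-cli ping` replies `PONG` once Redis is accepting connections. A small retry loop around it is handy in scripts that start the service and then use it right away. The function name and retry defaults below are hypothetical choices, and the sketch assumes redis-cli is on PATH and the server listens on the default 127.0.0.1:6379 seen in the status output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll Redis with `redis-cli ping` until it answers
# PONG or the attempts run out. Assumes the default 127.0.0.1:6379.
wait_for_redis() {
  local attempts="${1:-10}"   # how many times to try
  local delay="${2:-1}"       # seconds to sleep between tries
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if [ "$(redis-cli ping 2>/dev/null)" = "PONG" ]; then
      echo "Redis is up (attempt $i)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Redis did not answer PING after $attempts attempts" >&2
  return 1
}
```

For example: `sudo systemctl start redis && wait_for_redis 10 1`.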

Congrats! You can now start learning Redis. Connect to the Redis CLI by typing:

$ redis-cli

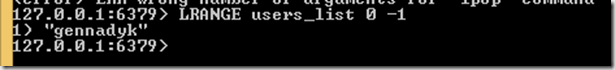

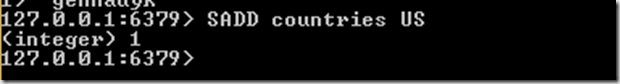
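Besides the interactive prompt, redis-cli also accepts a command directly on the command line, which makes a quick non-interactive smoke test easy: write a key, read it back, and delete it. In this sketch the helper name and the default key `tutorial:smoke-test` are hypothetical (pick a key you don't otherwise use, since it gets overwritten and deleted). It relies on redis-cli printing raw replies, without quotes or an `(integer)` prefix, when its output is not a terminal, as is the case inside `$(...)`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: SET a key, GET it back, then DEL it, using redis-cli
# against the default local server.
redis_roundtrip() {
  local key="${1:-tutorial:smoke-test}"
  local value="${2:-hello from systemd}"
  local got
  if [ "$(redis-cli set "$key" "$value")" != "OK" ]; then
    echo "SET failed" >&2
    return 1
  fi
  got=$(redis-cli get "$key")
  redis-cli del "$key" >/dev/null   # clean up the test key
  if [ "$got" != "$value" ]; then
    echo "GET returned '$got', expected '$value'" >&2
    return 1
  fi
  echo "round trip OK: $key = $got"
}
```

Run `redis_roundtrip` with no arguments once the service is up; it leaves no key behind.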

Now you can follow these Redis tutorials:

- https://redis.io/commands

- http://redistogo.com/documentation/introduction_to_redis

- http://objectrocket.com/blog/how-to/top-10-redis-cli-commands

Hope this was helpful.